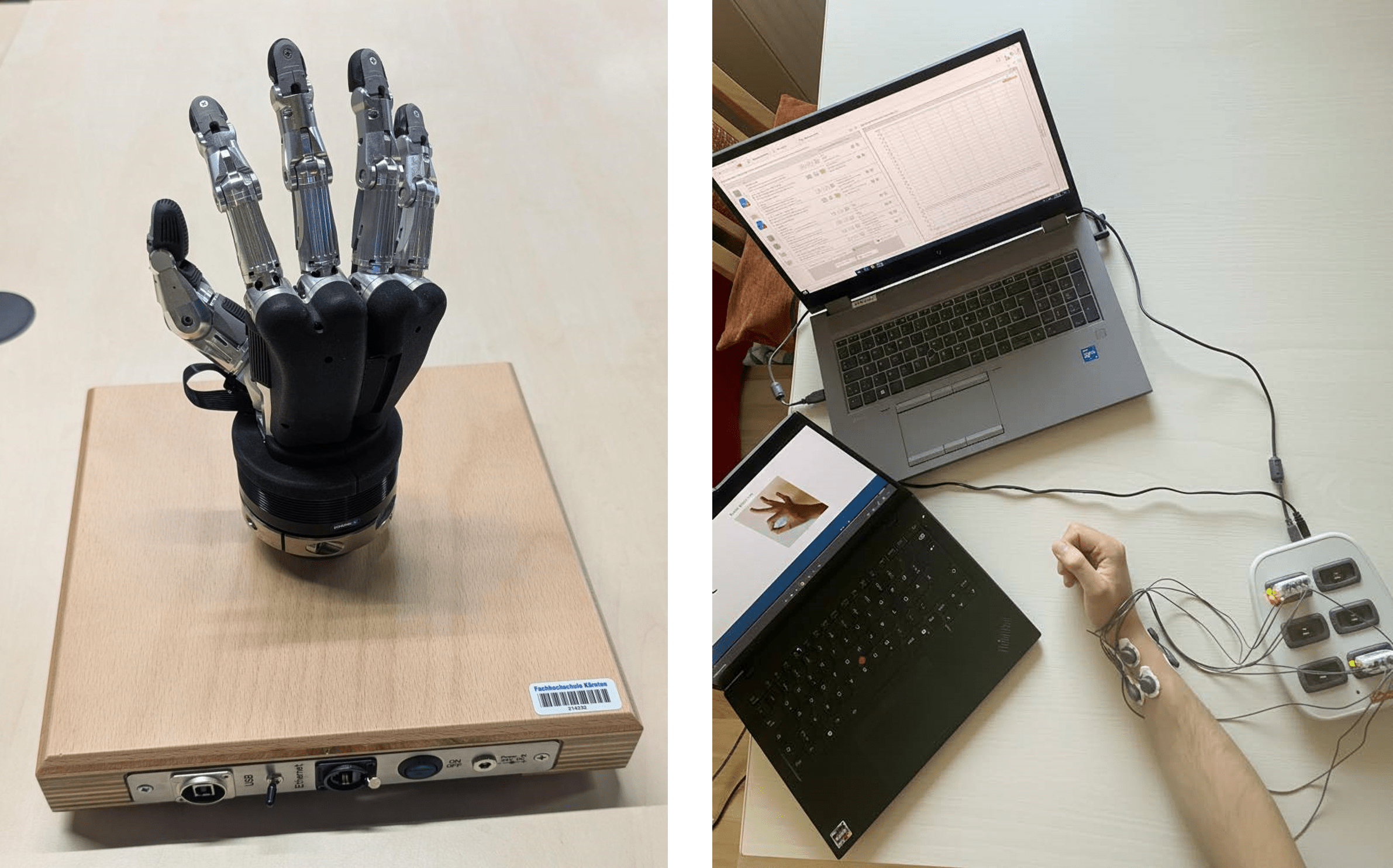

Hi, I’m Max! I recently graduated from the Carinthia University of Applied Sciences with a degree in Medical Engineering and Analytics. My master’s thesis focused on controlling a robotic hand in real time using the electrical activity of human muscles.

Collecting the Data and Convincing Friends to Help

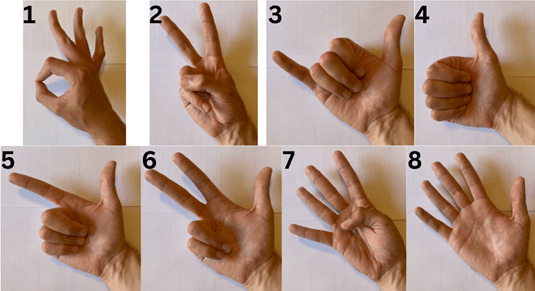

Before I could make the robotic hand move, I needed plenty of data. So I asked my friends and colleagues to lend me a hand (pun intended). They performed eight different hand gestures while I recorded the electromyography (EMG) signals from their forearms.

Once I had enough data, I trained a neural network to recognize the eight gestures. The results were encouraging: the model correctly classified about 80% of the test signals.

From Recorded Data to Real-Time Control

Of course, recognizing prerecorded signals is one thing; reacting to live muscle activity is another. I wanted the system to respond to the signals from my own arm as I moved it. After wrestling with some programming bugs and a few database issues, I finally got the real-time pipeline running.

The results? The robotic hand performed the intended gesture around 50% of the time, and some gestures were clearly easier to classify than others.

Looking Ahead

While there’s definitely room for improvement, I’m proud of how far the project has come. It’s exciting to see how human muscle signals can be used to control robots, and I hope future students will keep building on this work. Maybe one day a robotic hand will reliably perform every gesture a human hand can. That would help many amputees around the world.

Have a great one!

Max