This post presents the bachelor project of Mr. Fakia. Let's look at what he has to tell us.

Yours,

MedTech @ FH Kärnten Team

The brain is a very complex organ. It took many decades to even grasp how some of its core functions work, and despite the great achievements in neuroscience over the last century, much about the brain remains a mystery. Many tools have been developed to help us understand the magnetic and electrical activity occurring in the brain; one of these tools is electroencephalography (EEG). EEG captures the electrical activity of the brain using electrodes attached to the scalp, making it a non-invasive and low-risk option for diagnosing brain-related disorders compared with other popular modalities such as MRI or CT.

Each EEG electrode picks up the firing of brain cells (neurons) in a specific cortical region (for example, the frontal lobe) and registers the voltage at each point in time, resulting in a two-dimensional recording (time, voltage) for each of several cortical regions. To help visualise these data, a method known as EEG topographic mapping is usually applied, which shows a heatmap of the active regions of the brain during a specific event (e.g., an eyes-closed event). This gives a better overview of the dataset, makes it visually more interesting, and can even be used to draw conclusions about how brain activity correlates between cortical regions.

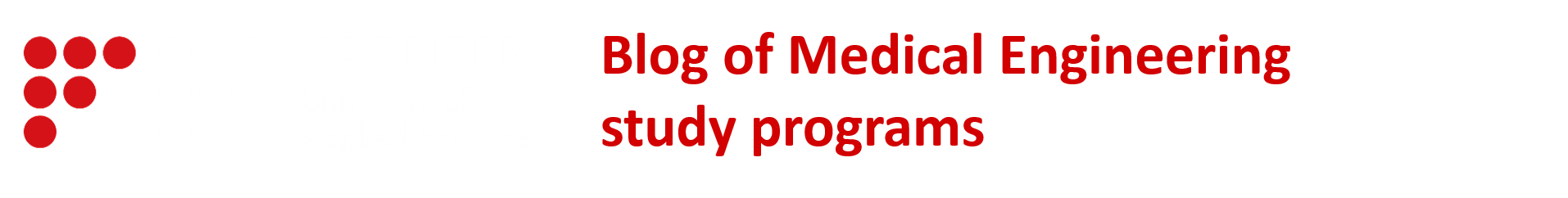

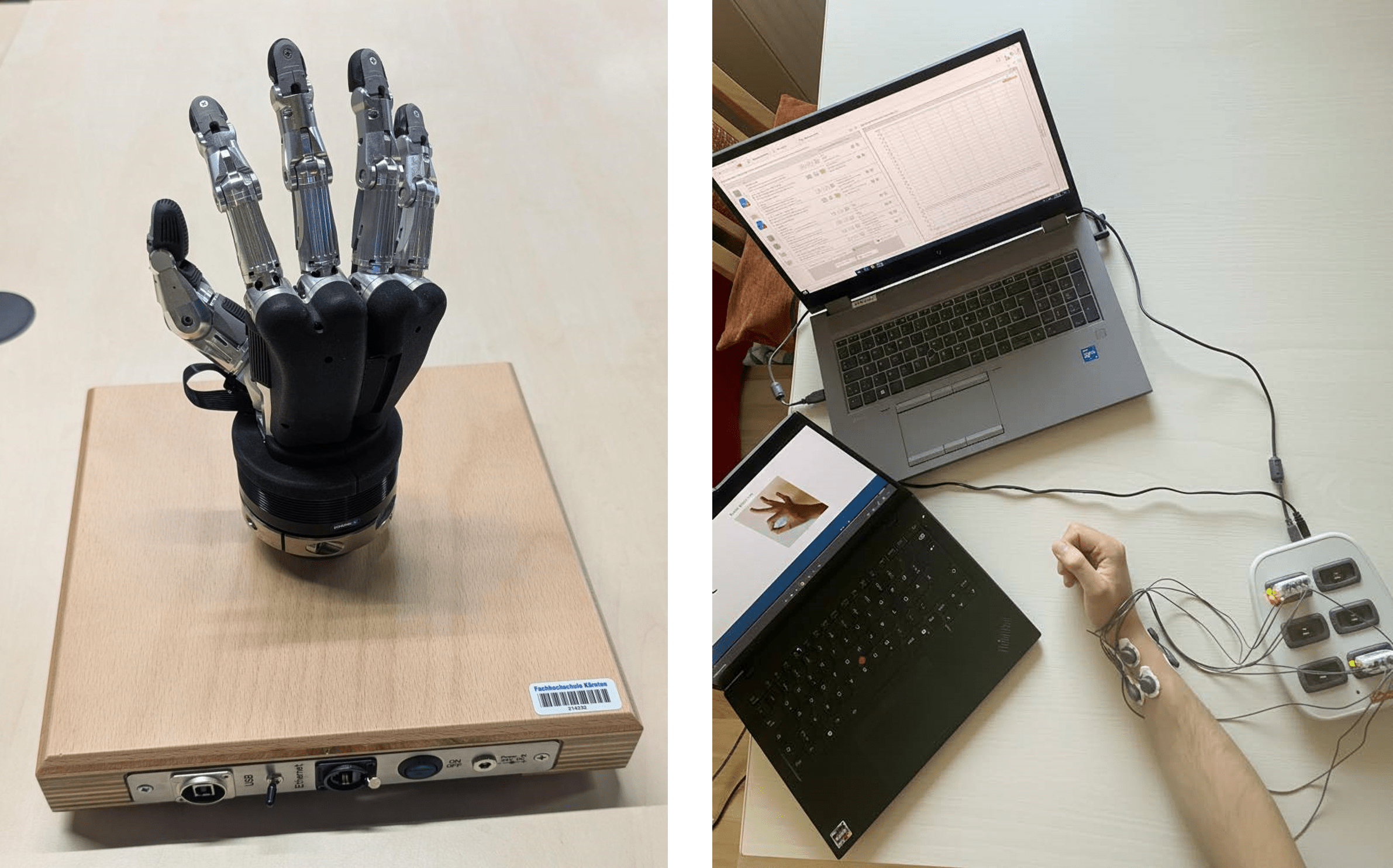

Since only a handful of electrodes are used in an EEG recording, a technique known as spatial interpolation is required to estimate the values at the points between the known channel positions. The result is a head-shaped 2D image of the electrode distribution together with a colormap (heatmap) showing the active regions of the brain in a transverse top view. This method has been in use for a very long time, and although it is the standard way to visualise EEG data spatially, particularly for specific stimuli (events), the idea of extending the visualisation to a third or fourth dimension (virtual/augmented reality) has hardly been explored.

The idea of my project is to use augmented reality to enhance the visualisation and understanding of EEG data, offering a more interesting perspective on the potential future of both technologies. To reach this goal, it is important to understand the limitations of EEG and its spatial resolution. EEG does not depict any "physical" structures but rather invisible electrical potentials. Therefore, a 3D head model is required to display the electrical activity as a heatmap, with the electrode positions of the EEG device in use projected onto this 3D model. The surface of the model acts as the heatmap/colormap "image", on which the activity is displayed as colour.

After the EEG data have been interpolated and projected onto the 3D head model, the whole 3D scene can be streamed to a device that supports augmented reality, such as the HoloLens. The 3D scene is created and streamed in the game engine Unity, which also provides a wide variety of tools and libraries for the AR/VR pipeline. The result of this project is a 3D model integrated into an interactive AR scene, demonstrating the increase of a specific brain activity, the so-called alpha frequency, during the resting eyes-closed condition. The model can be moved around, rotated, and scaled interactively with simple hand movements.
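
To make the interpolation step more concrete, here is a minimal Python sketch of how a few electrode values could be spread over the vertices of a head mesh and turned into heatmap intensities. It is not the Unity implementation used in the project. The interpolation method (inverse-distance weighting) is an assumption chosen for simplicity, and the function names `idw_interpolate` and `to_unit_range`, as well as the toy coordinates and values, are hypothetical.

```python
import numpy as np


def idw_interpolate(electrode_xyz, electrode_values, vertex_xyz, power=2.0, eps=1e-12):
    """Estimate a value at every mesh vertex by inverse-distance weighting.

    electrode_xyz:    (n_electrodes, 3) electrode positions on the head model
    electrode_values: (n_electrodes,)   one value per electrode (e.g. alpha power)
    vertex_xyz:       (n_vertices, 3)   positions of the mesh vertices
    """
    electrode_xyz = np.asarray(electrode_xyz, dtype=float)
    electrode_values = np.asarray(electrode_values, dtype=float)
    vertex_xyz = np.asarray(vertex_xyz, dtype=float)

    # Distance from every vertex to every electrode: (n_vertices, n_electrodes)
    diff = vertex_xyz[:, None, :] - electrode_xyz[None, :, :]
    dist = np.linalg.norm(diff, axis=2)

    # Closer electrodes get larger weights; eps avoids division by zero
    # when a vertex coincides with an electrode.
    weights = 1.0 / (dist + eps) ** power
    weights /= weights.sum(axis=1, keepdims=True)

    return weights @ electrode_values


def to_unit_range(values):
    """Rescale values to [0, 1] so they can index into a colormap."""
    values = np.asarray(values, dtype=float)
    span = values.max() - values.min()
    if span == 0:
        return np.zeros_like(values)
    return (values - values.min()) / span


if __name__ == "__main__":
    # Toy example: three electrodes and four mesh vertices (made-up numbers).
    electrodes = [[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    alpha_power = [1.0, 3.0, 8.0]
    vertices = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.5, 0.0], [-0.5, 0.5, 0.0]]

    vertex_values = idw_interpolate(electrodes, alpha_power, vertices)
    print(to_unit_range(vertex_values))
```

In a real pipeline, the normalised per-vertex values would be mapped to colours and applied to the surface of the head model, and smoother schemes (for example spline-based interpolation) may be preferable to inverse-distance weighting.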